Section: New Results

Tracklets Pre-Processing for Signature Computation in the Context of Multi-Shot Person Re-Identification

Participants: Salwa Baabou, Furqan Muhammad Khan, Thi Lan Anh Nguyen, Thanh Hung Nguyen, François Brémond.

Keywords: Person Re-Identification (Re-ID), tracklet, signature representation.

Tracklets pre-processing/representation

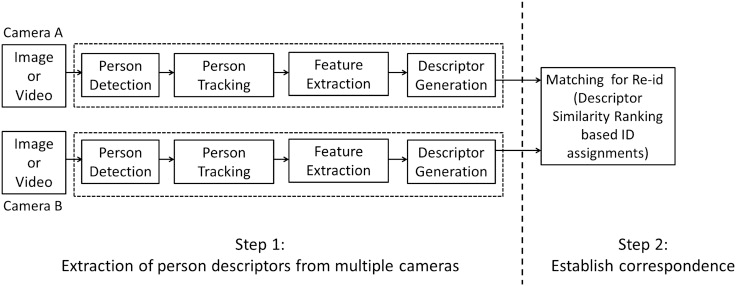

A person Re-Identification (Re-ID) system consists of two steps: i) constructing a person's appearance signature by extracting feature representations that are robust to pose variations, illumination changes and occlusions, and ii) establishing the correspondence between the feature representations of probe and gallery by learning similarity metrics or ranking functions (see Figure 10). However, appearance-based person Re-ID remains challenging because of disparities in human bodies and visual ambiguities across different cameras. We therefore focus on how to pre-process tracklets to compute and represent the signature for multi-shot person Re-ID, so as to handle high appearance variance and occlusions.
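The matching step ii) can be illustrated with a minimal sketch. It assumes that each tracklet has already been reduced to per-frame descriptors, collapses them into one signature by simple averaging (a basic multi-shot baseline, not the PAM signature), and ranks gallery signatures by Euclidean distance as a stand-in for a learned metric. The function names and toy data are hypothetical.

```python
import numpy as np

def signature_mean(frame_features: np.ndarray) -> np.ndarray:
    """Collapse an (n_frames, d) array of per-frame descriptors into one
    d-dimensional signature by averaging (hypothetical multi-shot baseline)."""
    return frame_features.mean(axis=0)

def rank_gallery(probe_sig: np.ndarray, gallery_sigs: np.ndarray) -> np.ndarray:
    """Return gallery indices sorted from most to least similar to the probe.
    Plain Euclidean distance stands in for a learned similarity metric."""
    dists = np.linalg.norm(gallery_sigs - probe_sig, axis=1)
    return np.argsort(dists, kind="stable")

# Toy example: three gallery identities, each observed in a short tracklet.
rng = np.random.default_rng(0)
centers = np.array([[0.0, 0.0], [5.0, 5.0], [10.0, 0.0]])
gallery = np.stack([signature_mean(c + 0.1 * rng.standard_normal((4, 2))) for c in centers])
# The probe tracklet belongs to identity 1.
probe = signature_mean(centers[1] + 0.1 * rng.standard_normal((6, 2)))
ranking = rank_gallery(probe, gallery)
print(ranking[0])  # expected: 1
```

In a real system the averaging and the Euclidean distance are exactly the parts that a richer signature and a learned metric replace.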

We worked on the CHU Nice dataset. First, we used the SSD detector [91] to obtain person detections. Second, inspired by the Multi-Object Tracking approach in [35], we extracted person tracklets, which we then used to compute the signatures of individuals with the Part Appearance Mixture (PAM) approach [31].
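Because the PAM signature is built from Gaussian mixture (GMM) components, a tracklet signature can be sketched as a mixture fitted to the per-frame descriptors of that tracklet. This is an illustration under stated assumptions, not the PAM implementation of [31]: it uses NumPy only, diagonal covariances, farthest-point initialisation and a fixed number of EM iterations, and it assumes the descriptors (e.g. LOMO) are already extracted. `fit_diag_gmm` is a hypothetical helper.

```python
import numpy as np

def fit_diag_gmm(X, k, n_iter=50, seed=0, reg=1e-6):
    """Fit a k-component diagonal-covariance GMM to descriptors X (n, d) with EM.
    Returns (weights (k,), means (k, d), variances (k, d))."""
    rng = np.random.default_rng(seed)
    n, d = X.shape
    # Farthest-point initialisation: one random frame, then the frames farthest
    # from the means chosen so far (keeps initial means in different modes).
    idx = [int(rng.integers(n))]
    for _ in range(1, k):
        d2 = np.min(((X[:, None, :] - X[idx][None, :, :]) ** 2).sum(axis=2), axis=1)
        idx.append(int(np.argmax(d2)))
    means = X[idx].copy()
    variances = np.tile(X.var(axis=0) + reg, (k, 1))
    weights = np.full(k, 1.0 / k)
    for _ in range(n_iter):
        # E-step: log pi_j + log N(x_i | mu_j, diag(var_j)) for every frame/component.
        log_prob = (
            np.log(weights)[None, :]
            - 0.5 * np.sum(np.log(2 * np.pi * variances), axis=1)[None, :]
            - 0.5 * np.sum((X[:, None, :] - means[None]) ** 2 / variances[None], axis=2)
        )
        log_norm = np.logaddexp.reduce(log_prob, axis=1, keepdims=True)
        resp = np.exp(log_prob - log_norm)  # (n, k) responsibilities, rows sum to 1
        # M-step: re-estimate mixture weights, means and diagonal variances.
        nk = resp.sum(axis=0) + 1e-12
        weights = nk / n
        means = (resp.T @ X) / nk[:, None]
        variances = np.maximum((resp.T @ X ** 2) / nk[:, None] - means ** 2, 0.0) + reg
    return weights, means, variances

# Toy tracklet: 20 frames in a "front" appearance mode and 20 in a "side" mode.
rng = np.random.default_rng(1)
front = rng.normal([0.0, 0.0], 0.3, size=(20, 2))
side = rng.normal([5.0, 5.0], 0.3, size=(20, 2))
X = np.vstack([front, side])
weights, means, variances = fit_diag_gmm(X, k=2)
print(np.round(weights, 2), np.round(means[np.argsort(means[:, 0])], 1))
```

The number of components `k` is fixed by hand here, which mirrors one of the limitations discussed at the end of this section.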

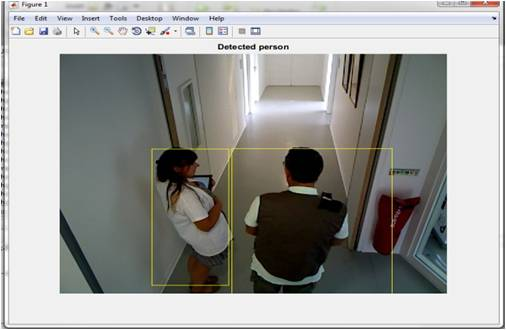

Figure 11 visualizes the detection results on a sample from the CHU Nice dataset.

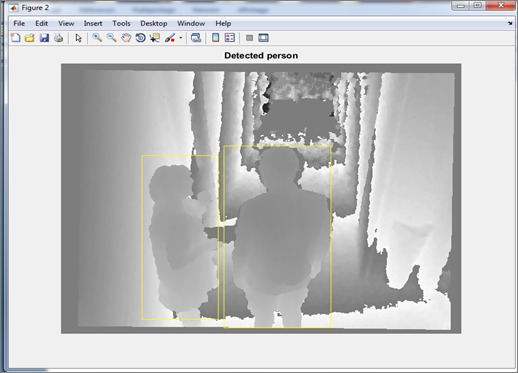

Figure 12 visualizes the tracking results on a sample of consecutive frames from the CHU Nice dataset.


Figure 13 shows some samples of tracklets of a person from the CHU Nice dataset.
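The tracker of [35] is not reproduced here. As a much simpler stand-in, the sketch below greedily links person detections from frame to frame by bounding-box overlap (IoU) and starts a new tracklet whenever a detection cannot be matched. It assumes detections are already filtered to the person class; `link_tracklets`, `iou` and the 0.5 threshold are hypothetical choices for illustration.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)

def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def link_tracklets(frames: List[List[Box]], iou_thr: float = 0.5) -> List[List[Tuple[int, Box]]]:
    """Greedily link per-frame detections into tracklets of (frame_index, box).
    A tracklet is extended only by a detection in the next frame whose IoU with
    its last box is at least iou_thr; unmatched detections start new tracklets."""
    tracklets: List[List[Tuple[int, Box]]] = []
    active: List[int] = []  # tracklets that were extended in the previous frame
    for t, boxes in enumerate(frames):
        # Score every (active tracklet, detection) pair, best overlap first.
        pairs = sorted(
            ((iou(tracklets[i][-1][1], b), i, j) for i in active for j, b in enumerate(boxes)),
            reverse=True,
        )
        used_tracks, used_dets, next_active = set(), set(), []
        for score, i, j in pairs:
            if score < iou_thr:
                break
            if i in used_tracks or j in used_dets:
                continue
            tracklets[i].append((t, boxes[j]))
            used_tracks.add(i)
            used_dets.add(j)
            next_active.append(i)
        for j, b in enumerate(boxes):
            if j not in used_dets:
                tracklets.append([(t, b)])
                next_active.append(len(tracklets) - 1)
        active = next_active
    return tracklets

# Two people walking right; the second person is missed in frame 2.
frames = [
    [(0, 0, 10, 20), (50, 0, 60, 20)],
    [(1, 0, 11, 20), (51, 0, 61, 20)],
    [(2, 0, 12, 20)],
    [(3, 0, 13, 20), (53, 0, 63, 20)],
]
tracks = link_tracklets(frames)
print([len(t) for t in tracks])  # expected: [4, 2, 1]
```

The missed detection splits the second person into two tracklets, which is one reason multi-shot signatures must cope with short and fragmented tracklets.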


Experimental results

CHU Nice dataset

CHU Nice is an RGB-D (RGB + depth) dataset collected at the Nice hospital (CHU) in Nice, France. Most of the people recruited for this dataset were elderly people of both genders, aged 65 and above. It contains 615 videos (149,365 frames) acquired from two cameras: one in the corridor and one in the room.

| Method | Rank-1 (%) | Rank-5 (%) | Rank-10 (%) | Rank-20 (%) |
|---|---|---|---|---|
| LOMO+XQDA | 30.7 | 64.6 | 80.3 | 90.3 |
| PAM-LOMO+XQDA | 38.5 | 69.2 | 84.6 | 100.0 |
| PAM-LOMO+KISSME | 81.8 | 90.9 | 100.0 | 100.0 |

Table 4 reports the recognition rate (%) at ranks 1, 5, 10 and 20 of the baseline method LOMO+XQDA [89], PAM-LOMO+XQDA and PAM-LOMO+KISSME on the CHU Nice dataset. PAM-LOMO+KISSME achieves the best performance, with an 81.8% rank-1 recognition rate compared with 30.7% for the baseline. This shows that our adaptation of the LOMO feature descriptor [89] and of KISSME metric learning [84] to the PAM representation is effective.
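The rank-k values in Table 4 are points of a Cumulative Matching Characteristic (CMC) curve: the fraction of probes whose correct identity appears among the k closest gallery entries. The sketch below learns a KISSME matrix M = inv(Σ_S) − inv(Σ_D), where Σ_S and Σ_D are second-moment matrices of difference vectors of same-identity and different-identity pairs, clips M to be positive semi-definite, and evaluates it with a CMC curve. It works on plain feature vectors rather than PAM signatures, and the synthetic data (one informative dimension plus one nuisance dimension standing in for, e.g., illumination) is invented purely for illustration; the function names are hypothetical.

```python
import numpy as np

def kissme(X, pairs_same, pairs_diff, reg=1e-6):
    """Learn a KISSME Mahalanobis matrix from index pairs into X (n, d)."""
    def pair_cov(pairs):
        diffs = np.array([X[i] - X[j] for i, j in pairs])
        return diffs.T @ diffs / len(pairs) + reg * np.eye(X.shape[1])
    M = np.linalg.inv(pair_cov(pairs_same)) - np.linalg.inv(pair_cov(pairs_diff))
    # Project onto the PSD cone (drop negative eigenvalues) so distances are >= 0.
    eigval, eigvec = np.linalg.eigh((M + M.T) / 2)
    return (eigvec * np.clip(eigval, 0, None)) @ eigvec.T

def mahalanobis_dist(P, G, M):
    """Pairwise (x - y)^T M (x - y) between rows of P (n_p, d) and G (n_g, d)."""
    diff = P[:, None, :] - G[None, :, :]
    return np.einsum("pgi,ij,pgj->pg", diff, M, diff)

def cmc(dist, probe_ids, gallery_ids, max_rank):
    """Entry k-1 is the fraction of probes whose true identity is in the top k.
    Assumes every probe identity is present in the gallery."""
    order = np.argsort(dist, axis=1, kind="stable")
    matches = gallery_ids[order] == probe_ids[:, None]  # (n_p, n_g) booleans
    first_hit = matches.argmax(axis=1)                  # 0-based rank of the true match
    return (first_hit[:, None] <= np.arange(max_rank)[None, :]).mean(axis=0)

rng = np.random.default_rng(0)
n_ids, per_id = 5, 4

def sample(ids):
    informative = 2.0 * ids + 0.1 * rng.standard_normal(len(ids))
    nuisance = 5.0 * rng.standard_normal(len(ids))
    return np.column_stack([informative, nuisance])

train_ids = np.repeat(np.arange(n_ids), per_id)
X = sample(train_ids)
all_pairs = [(i, j) for i in range(len(X)) for j in range(i + 1, len(X))]
same = [(i, j) for i, j in all_pairs if train_ids[i] == train_ids[j]]
diff = [(i, j) for i, j in all_pairs if train_ids[i] != train_ids[j]]
M = kissme(X, same, diff)

# Fresh probe and gallery samples, one per identity.
probe_ids = gallery_ids = np.arange(n_ids)
G, P = sample(gallery_ids), sample(probe_ids)
curve_kissme = cmc(mahalanobis_dist(P, G, M), probe_ids, gallery_ids, max_rank=n_ids)
curve_euclid = cmc(mahalanobis_dist(P, G, np.eye(2)), probe_ids, gallery_ids, max_rank=n_ids)
print("KISSME rank-1..5:", curve_kissme)
print("Euclid rank-1..5:", curve_euclid)
```

On this toy data KISSME learns to down-weight the nuisance dimension, which is the kind of effect that the metric-learning stage is meant to provide on real appearance descriptors.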

Limitations

Figure 14 visualizes the PAM signature representation for selected samples from the CHU Nice dataset. We visualize each GMM component by constructing a composite image: given an appearance descriptor, we compute the likelihood of an image belonging to a model component, and we then generate the composite image by summing the images of the corresponding person weighted by their likelihood. Our signature representation is able to cater for variations in a person's pose and orientation as well as in illumination; it also deals with occlusions and reduces the effect of the background. However, this PAM signature representation presents some limitations, especially on our own CHU Nice dataset, which can affect the quality of the signature representation (see Figure 5). Among these challenging problems, we can cite:

- The number of GMM components does not match the number of a person's poses/orientations, and it depends on the low-level features used.
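The composite-image visualization described above can be sketched as follows, under two assumptions that the text does not specify: the "likelihood" is taken to be the posterior probability of each component given an image's descriptor under a diagonal GMM, and each composite is normalized by its total weight so that pixel values stay in the input range. Images are assumed to be cropped and resized to a common size; the function names and toy values are hypothetical.

```python
import numpy as np

def component_posteriors(feats, weights, means, variances):
    """Posterior p(component j | descriptor x_i) under a diagonal GMM.
    feats: (n, d); weights: (k,); means, variances: (k, d). Returns (n, k)."""
    log_p = (
        np.log(weights)[None, :]
        - 0.5 * np.sum(np.log(2 * np.pi * variances), axis=1)[None, :]
        - 0.5 * np.sum((feats[:, None, :] - means[None]) ** 2 / variances[None], axis=2)
    )
    log_p -= log_p.max(axis=1, keepdims=True)  # stabilise before exponentiating
    p = np.exp(log_p)
    return p / p.sum(axis=1, keepdims=True)

def composite_images(images, feats, weights, means, variances):
    """One composite per component: likelihood-weighted average of a person's images.
    images: (n, H, W, C) float array aligned with feats. Returns (k, H, W, C)."""
    post = component_posteriors(feats, weights, means, variances)  # (n, k)
    w = post / post.sum(axis=0, keepdims=True)                     # normalise per component
    return np.einsum("nk,nhwc->khwc", w, images)

# Toy person: two dark images in one pose, two bright images in another pose.
images = np.concatenate([np.zeros((2, 2, 2, 1)), np.ones((2, 2, 2, 1))])
feats = np.array([[0.0], [0.1], [5.0], [5.1]])
comps = composite_images(
    images, feats,
    weights=np.array([0.5, 0.5]),
    means=np.array([[0.0], [5.0]]),
    variances=np.array([[1.0], [1.0]]),
)
print(comps.shape)  # expected: (2, 2, 2, 1)
```

When the number of components does not match the number of distinct poses, several poses are blended into one composite, which is how the limitation above becomes visible in such images.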

We are currently working to improve the PAM signature representation by using skeleton information and pose machines on our CHU Nice dataset.
